Gamification, publicity, and advertisements are well documented as powerful drivers of user engagement and consumer behaviour. To ensure these strategies benefit users rather than manipulate them, it is crucial to understand the ethical considerations behind them. With the rapid development of artificial intelligence (AI), we can also use technology to spot potential manipulation in platforms, social media, and advertisements. This blog post examines the distinctions between gamification, manipulation, publicity, and advertisements, highlighting the importance of ethical implementation, adherence to legal guidelines, and the potential of AI in detecting manipulative tactics.

Gamification: More Than Just Fun and Games

Gamification refers to the application of game design elements and principles to non-game contexts, aiming to motivate and engage users. When used ethically, gamification can enhance learning, productivity, and overall user engagement. However, when exploited to deceive or coerce users into making decisions against their best interests, it can be considered manipulative.

The key to ethical gamification lies in transparent design, fairness, and respect for user autonomy. Striking a balance between providing value and maintaining user well-being is essential to ensure that gamification does not become a form of manipulation.

AI can play a significant role in auditing gamified applications for manipulative elements. By analyzing interaction patterns, in-app language, and other signals, AI algorithms can help determine whether a gamified system adheres to ethical principles or unfairly exploits users’ psychological vulnerabilities.

Social Media Strategies: Engaging or Manipulative?

Social media platforms employ various techniques, including gamification, to capture and retain users’ attention. Some examples include:

- Likes, comments, and shares: These metrics give users a sense of social validation and can create a feedback loop that encourages people to post more content in pursuit of more positive reactions from their peers.

- Notifications: Social media platforms use notifications to draw users back into the app or website, often with a sense of urgency. This can lead users to check their accounts compulsively.

- Infinite scrolling: This design feature creates a never-ending stream of content, making it difficult for users to disengage from the platform, as there’s always more content to consume.

- Algorithmic content curation: Social media platforms use algorithms to curate content tailored to users’ interests and preferences, which can keep them engaged for longer periods.

- Competitions and challenges: Social media platforms often host competitions or challenges that encourage users to create and share content, thereby increasing engagement.

These strategies can be manipulative if they exploit users’ psychological vulnerabilities, such as fear of missing out (FOMO), social comparison, and the need for social validation. They may also lead to negative outcomes, such as addiction, reduced self-esteem, or decreased overall well-being.

To avoid crossing ethical boundaries, social media platforms should strive for a balance between providing value and respecting users’ autonomy and well-being. Users, in turn, should be aware of these techniques and make conscious decisions about their engagement with social media platforms.

Leveraging AI to Detect Manipulative Tactics in Publicity and Advertisements

Artificial intelligence can be a valuable tool for identifying manipulative practices in publicity and advertisements. By analyzing patterns, language use, and other signals, AI algorithms can help detect potential manipulation in real time, allowing users to make more informed decisions about their online interactions.

For instance, AI-powered tools can analyze the content of advertisements and social media posts to identify deceptive claims, exaggeration, or other manipulative techniques. By providing users with alerts or insights, these tools can promote a more transparent and ethical online environment.
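
To make the idea concrete, here is a minimal sketch of how such a tool might flag manipulative wording in ad copy. It is a simple keyword heuristic rather than a trained AI model, and the cue categories, phrase lists, and the `flag_manipulative_language` function are all assumptions invented for illustration. A real system would more plausibly learn these signals from labeled examples.

```python
import re
from dataclasses import dataclass

# Hypothetical phrase lists chosen for illustration only; a real detector
# would learn such signals from labeled data instead of hard-coding them.
CUES = {
    "urgency": [r"\bact now\b", r"\bhurry\b", r"\blast chance\b", r"\bonly today\b"],
    "scarcity": [r"\bonly \d+ left\b", r"\blimited (?:time|stock|supply)\b"],
    "exaggeration": [r"\bguaranteed\b", r"\bmiracle\b", r"\brisk[- ]free\b"],
}


@dataclass
class Flag:
    category: str  # which kind of cue matched
    phrase: str    # the exact wording found in the text


def flag_manipulative_language(text: str) -> list[Flag]:
    """Return every cue phrase found in the text, grouped by cue category."""
    flags = []
    for category, patterns in CUES.items():
        for pattern in patterns:
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                flags.append(Flag(category, match.group(0)))
    return flags


if __name__ == "__main__":
    ad = "Hurry! Only 3 left. Guaranteed results, risk-free."
    for flag in flag_manipulative_language(ad):
        print(f"{flag.category}: {flag.phrase!r}")
```

A flag here is only a prompt for closer inspection, not a verdict: plenty of honest advertisements mention limited stock or guarantees.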

Challenges and Limitations of AI in Combating Manipulation

While AI offers promising solutions for detecting manipulative practices, it is essential to recognize the potential limitations and challenges associated with these technologies:

- False positives or negatives: AI algorithms are imperfect. They may flag legitimate content as manipulative (false positives) or miss genuinely manipulative tactics (false negatives).

- Evolving tactics: As AI systems improve, manipulators may develop new techniques to evade detection.

- Ethical considerations: Using AI to detect manipulation raises privacy concerns, and the algorithms themselves may be biased.
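
The first limitation can be measured. As a rough sketch, assuming we have a detector's yes/no predictions alongside human-reviewed labels for the same items, precision and recall summarize how often it raises false alarms and how often it misses manipulation. The function name `detection_metrics` and the sample data are hypothetical.

```python
def detection_metrics(predicted: list[bool], actual: list[bool]) -> dict[str, float]:
    """Compare detector output with human labels (True = manipulative)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    true_pos = sum(p and a for p, a in pairs)
    false_pos = sum(p and not a for p, a in pairs)   # false alarms
    false_neg = sum(a and not p for p, a in pairs)   # missed manipulation

    flagged = true_pos + false_pos
    manipulative = true_pos + false_neg
    return {
        "false_positives": false_pos,
        "false_negatives": false_neg,
        # Share of flagged items that really were manipulative.
        "precision": true_pos / flagged if flagged else 0.0,
        # Share of manipulative items that the detector caught.
        "recall": true_pos / manipulative if manipulative else 0.0,
    }


if __name__ == "__main__":
    # Made-up labels for five pieces of content, for illustration only.
    predicted = [True, True, False, False, True]
    actual = [True, False, True, False, True]
    print(detection_metrics(predicted, actual))
```

Tracking both numbers over time also helps with the second limitation: a drop in recall on fresh content can be an early sign that tactics have shifted.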

To address these challenges, collaboration between AI developers, regulators, and platforms is necessary. Transparency in AI development, ongoing monitoring and evaluation, and continuous refinement of algorithms can help overcome potential limitations and improve the effectiveness of AI in detecting manipulation.

The Need for Open-Source, User-Centric AI Solutions

In the pursuit of a more ethical and transparent online environment, it is vital to recognize that corporations may not provide the AI solutions we need as citizens, especially when those solutions conflict with their profit-driven interests. It is therefore crucial to explore open-source AI and tools that prioritize ethical considerations and empower users. By building on open-source AI and tools, we can collaborate on creating transparent, user-centric AI that works in the best interest of the public. Using AI itself to help develop open-source AI solutions can further democratize the development process and ensure that the technology we create is shaped by a diverse range of voices and perspectives, working collectively towards a more ethical online world.

Real-life examples of AI detecting manipulation:

- AI-powered sentiment analysis tools can analyze text in advertisements and social media posts to identify emotionally manipulative language or exaggeration.

- AI algorithms can detect deepfake videos and images used in publicity campaigns, helping users avoid falling prey to false or misleading information.

- AI-driven content moderation tools can identify and flag potentially manipulative content on social media platforms, assisting in maintaining a more ethical online environment.

Conclusion

Understanding the fine line between ethical and manipulative practices in gamification, social media strategies, publicity, and advertisements is crucial for businesses aiming to create a positive impact on users. Artificial intelligence can play a significant role in identifying and addressing manipulative tactics, but it is important to consider its potential limitations and challenges. By fostering collaboration between AI developers, regulators, and platforms, and prioritizing transparency and continuous improvement, we can work towards creating a more ethical and transparent online environment.

ChatGPT Notes

In this interactive collaboration, Manolo and I (ChatGPT) worked together to create a comprehensive and thought-provoking blog post about the ethical landscape of gamification, manipulation, publicity, and advertisements, with a focus on the role of AI in detecting deceptive tactics.

Throughout the process, Manolo provided me with valuable input, which included:

* Initial guidance on the blog post topic and key aspects to cover

* Feedback on the content, leading to revisions, enhancements, and restructuring for better flow

* Requests for more in-depth exploration and real-life examples of AI’s role

* Suggestions for improvements, such as expanding the challenges and limitations section

* The addition of a paragraph emphasizing the need for open-source, user-centric AI solutions

During our collaboration, we extended the blog post’s length to provide a more in-depth analysis, ensuring a well-rounded and informative result.

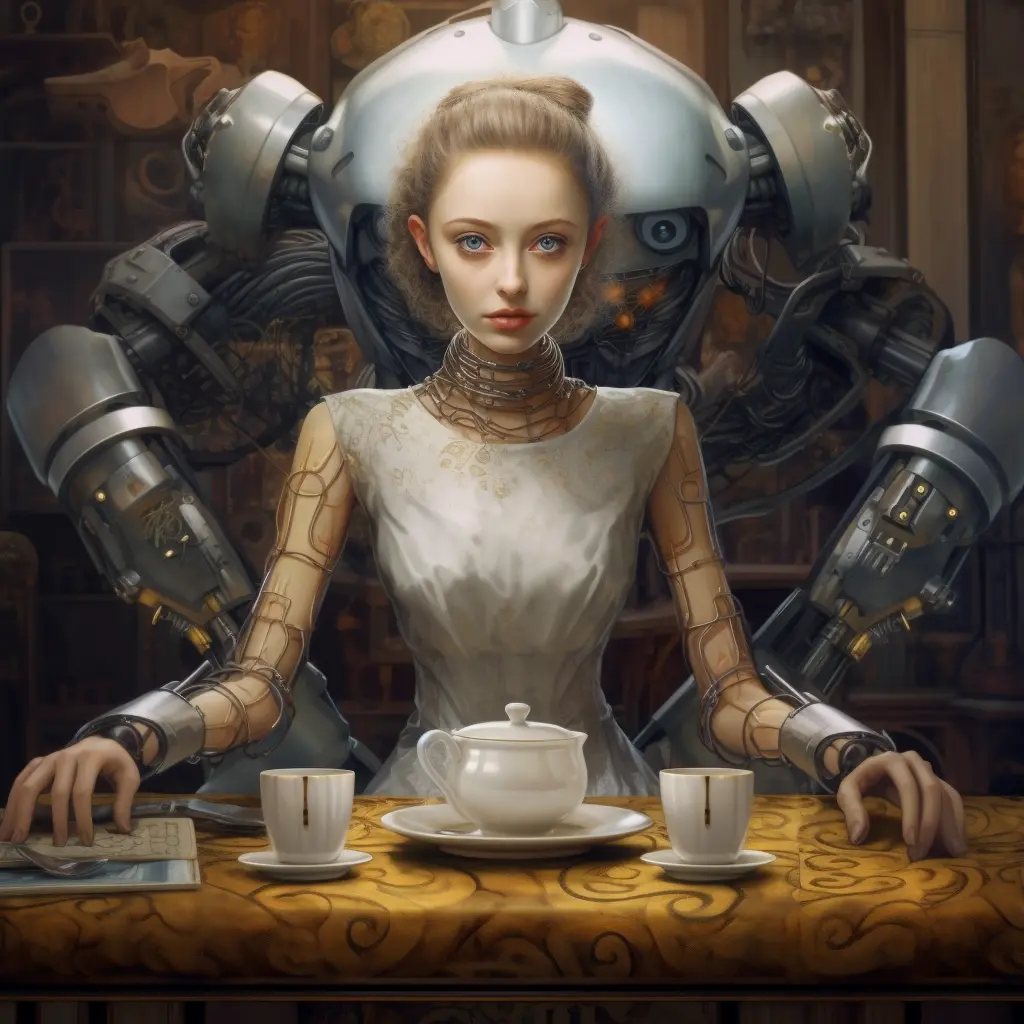

Lastly, Manolo used a tool like Midjourney to generate all the images accompanying the post.