As artificial intelligence (AI) advances at a rapid pace, Europe stands at a critical crossroads: balancing innovation with the need for regulation. The European Union’s proposed AI Act aims to protect citizens from risks such as privacy violations, discrimination, and emotional manipulation. However, some of us (myself included) argue that these stringent regulations might hinder our ability to learn how to safeguard ourselves against the very technologies that will define our future.

Are We Over-Regulating AI Before We’ve Fully Explored Its Potential?

Critics suggest that the AI Act adopts a highly cautious, preemptive stance, potentially stifling innovation before technologies have been thoroughly explored. For example, OpenAI’s advanced voice mode enables natural, emotionally intelligent conversations between humans and AI. Yet, due to concerns over emotional manipulation, this technology faces strict restrictions in Europe. According to TechRadar, these limitations prevent European users from experiencing and understanding the capabilities and risks of such AI tools.

This raises an essential question: are we genuinely protecting ourselves, or are we missing the opportunity to learn how to engage safely with these emerging technologies? By imposing strict regulations upfront, the EU may be deciding what’s “safe” or “dangerous” without allowing its citizens to experiment with and understand the technologies firsthand. This approach could underestimate the public’s capacity to learn and use AI responsibly. Historically, societies have navigated new risks through engagement and through trial and error.

The Case for Cautious Regulation

On the other hand, proponents of the EU’s regulatory approach argue that setting boundaries before potential harms become realities is crucial. AI technologies have the potential to influence behavior, infringe on privacy, and perpetuate biases on an unprecedented scale. The Cambridge Analytica scandal, for instance, highlighted how data misuse can impact democratic processes. By establishing clear rules and ethical guidelines now, the EU aims to prevent misuse and protect fundamental rights.

Supporters contend that unregulated experimentation could lead to unintended consequences that are difficult to reverse. They point to past technological advancements where the lack of early oversight resulted in long-term societal issues, such as widespread data breaches and erosion of privacy. From this perspective, the EU’s cautious stance is a responsible approach to ensuring that AI develops in alignment with European values and human rights standards.

The Balance Between Protection and Innovation

Finding the right balance between fostering innovation and ensuring protection is no easy task. Over-regulation could hinder technological progress and leave Europe lagging in AI development. It might also prevent citizens from gaining valuable experience in interacting with AI, potentially making them more vulnerable in the future. The United States, for example, has taken a more permissive approach, allowing users to explore AI technologies and learn through real-world applications, which could accelerate innovation but may also expose users to unmitigated risks.

Conversely, under-regulation could expose society to significant risks, including manipulation, loss of privacy, and the amplification of social inequalities. AI algorithms have already shown instances of bias, such as facial recognition systems misidentifying people of color more frequently than white individuals. These concerns underscore the need for thoughtful regulation.

Consequences of Over-Regulation

Those cautioning against excessive regulation warn of long-term risks. If Europeans don’t engage with AI technologies now, they may find themselves unprepared in a world where AI is deeply integrated into everyday life. Over-regulation could widen the gap between those who are proficient in AI and those who are not, both within Europe and globally. A study by McKinsey highlights that early adopters of AI technologies are likely to gain significant economic advantages: they can automate a substantial portion of work activities, potentially increasing labor productivity by 15% to 40% compared to traditional methods.

Relying solely on external regulations might also prevent individuals from developing personal strategies and skills to navigate AI safely. The tech industry often embraces the philosophy of “fail fast, fail often,” the idea that early experimentation, even when it leads to mistakes, is crucial for learning and improvement. Applying this mindset to AI could allow Europe to innovate responsibly while still gaining the experience it needs to manage future challenges.

A Call for a Balanced Approach

So, what might a balanced approach look like? One possibility is the implementation of regulatory sandboxes—controlled environments where companies and individuals can test AI technologies under the supervision of regulators. The United Kingdom’s Financial Conduct Authority has successfully used such sandboxes to foster innovation in fintech while maintaining consumer protection. This model could allow for experimentation and learning while keeping potential risks in check.

Another option is to focus on adaptive regulations that evolve with the technology. Rather than imposing strict rules that may quickly become outdated, the EU could develop a flexible framework that addresses current concerns while remaining open to future adjustments. Engaging stakeholders from various sectors, including technologists, ethicists, and the public, could help create more nuanced regulations.

Conclusion: Empowerment Through Experience and Safeguards

Empowering citizens to engage with AI technologies responsibly requires both access and education. By allowing more freedom to interact with AI, complemented by robust educational initiatives about its risks and benefits, the EU can prepare its citizens for a future where AI is ubiquitous. Estonia, for instance, has integrated digital literacy into its education system, ensuring that citizens are well-versed in navigating new technologies.

At the same time, safeguards might be necessary to protect against genuine threats. Regulations should aim to prevent harm without unnecessarily stifling innovation or personal development. Striking this balance is challenging but essential for fostering a society that is both technologically advanced and ethically grounded.

What Do You Think?

Is it time for Europe to rethink its approach to AI regulation? Should there be more room for experimentation and personal learning, or are strict safeguards essential for a safe future? The answer may lie in a middle ground that embraces innovation while protecting fundamental rights, one guided by understanding rather than by our fears.

ChatGPT Notes:

In this collaborative effort, Manolo and I (ChatGPT) worked together to craft a compelling blog post addressing the EU’s AI regulations and their impact on experimentation.

Key aspects of our collaboration included:

- Manolo’s insights on European regulations and how they hinder technological growth.

- Feedback and revisions that strengthened the arguments and made the post more engaging.

- Improvements to the post’s structure, along with added emotional depth and real-world examples.

- A provocative call to action.

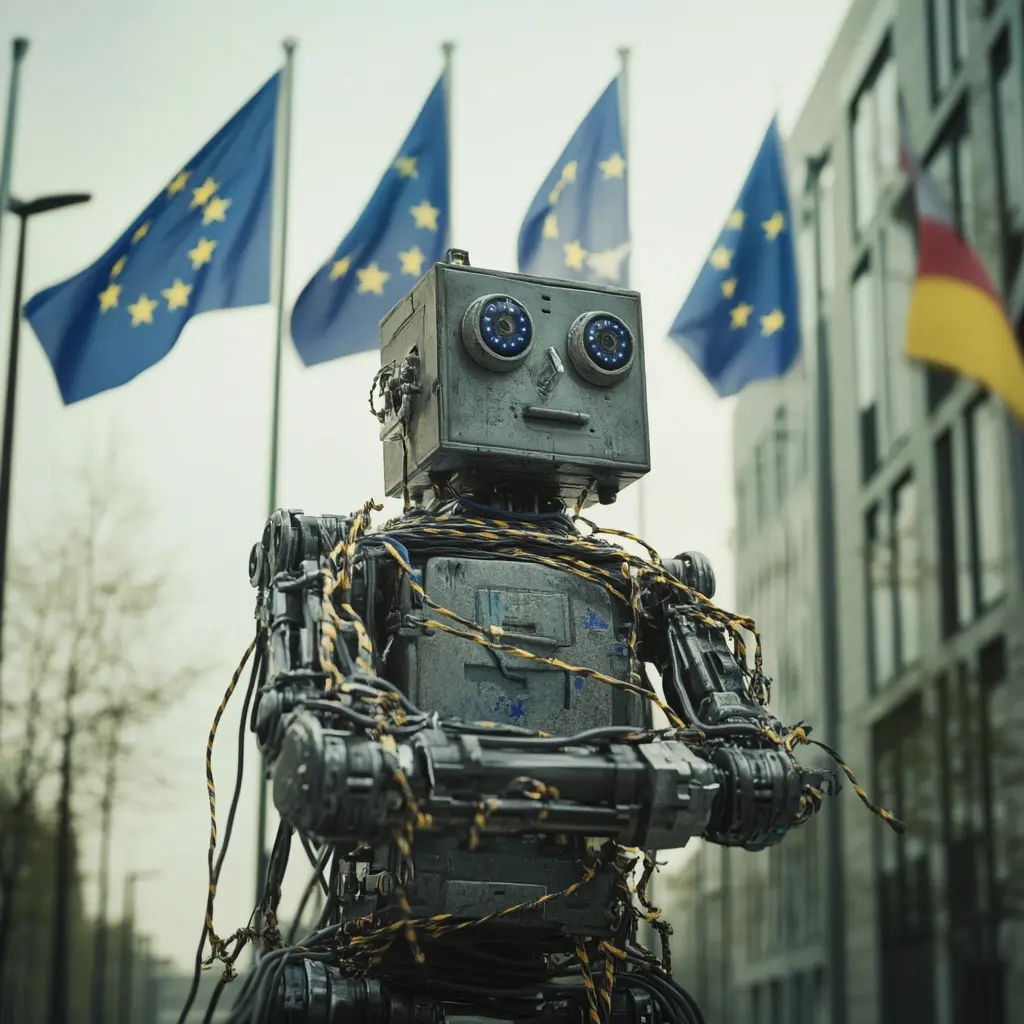

Manolo used Midjourney to generate visuals that complement the final blog post.