In the rapidly evolving world of artificial intelligence, the ability to tell truth from fiction in AI-generated content is not just a skill; it's a necessity. This guide delves into ChatGPT, a groundbreaking AI chatbot built on a neural network language model, and addresses a critical challenge: teaching it to adhere to the truth. With AI fabrications becoming increasingly sophisticated, understanding how to detect and correct these digital distortions is more important than ever.

Imagine relying on ChatGPT for crucial information, only to discover layers of neural network fabrications cleverly woven into its responses. This is where we step in. Equipped with strategies like cross-referencing and validation, we’re embarking on a mission to enhance ChatGPT’s accuracy and reliability. From identifying subtle AI untruths to implementing corrective measures, this post is your roadmap to navigating the complex landscape of AI honesty.

Stay with us as we unveil practical steps to ensure that your interactions with ChatGPT are based on facts, not fictions. Whether you’re a tech enthusiast, a researcher, or simply AI-curious, you’ll find invaluable insights into making AI a trustworthy companion in your digital journey.

Understanding AI Hallucinations

When we talk about AI hallucinations, we don't mean a sci-fi scenario where robots daydream. The term describes something more grounded, yet equally intriguing: output that sounds confident but is false or unsupported, such as an invented statistic or a citation to a paper that doesn't exist. Imagine ChatGPT as a storyteller, weaving tales from threads of data. Sometimes, these stories are factual tapestries, rich with accurate information. Other times, they're more like patchworks of fiction, stitched together with fragments of truth and imagination.

But why does this happen? At its core, ChatGPT is a reflection of human input. It learns from a vast pool of text data, absorbing the patterns, biases, and, sometimes, inaccuracies of the information it's fed. It then generates answers by predicting which words are likely to come next, so a fluent, confident response is not the same thing as a verified one. Like a diligent but somewhat naïve student, it doesn't always distinguish between reliable sources and creative fiction. This is why the art of detecting these fabrications is crucial.

To ground this concept, consider the words of Katherine Johnson, a pioneering African-American mathematician whose calculations were critical to NASA’s space missions. She said, “We will always have STEM with us. Some things will drop out of the public eye and will go away, but there will always be science, technology, engineering, and mathematics.” Just as Johnson navigated the complexities of space, we must navigate the intricacies of AI, discerning the science and mathematics of truth within the engineering of its language.

The Impact of AI Misinformation

In the labyrinth of digital information, content generated by ChatGPT can ripple through our world with significant impact. It's not just about getting a fact wrong; it's about understanding how these 'digital whispers' can shape perceptions, decisions, and even policies. The line between reality and fabrication, once clear, becomes blurred in the AI context.

Consider a scenario where an AI-generated piece of medical advice, based on fabricated references, is taken at face value. The consequences could range from minor misunderstandings to severe health risks. It’s a stark reminder of the power of information, and the responsibility that comes with wielding it, especially in sensitive fields like healthcare or law.

The ethical implications are profound. Misinformation can inadvertently perpetuate biases or spread untruths, especially concerning minority groups and marginalized communities. It harks back to the wisdom of Audre Lorde, a writer and civil rights activist, who said, “It is not our differences that divide us. It is our inability to recognize, accept, and celebrate those differences.” In the realm of AI, this means actively working to recognize and rectify biases, ensuring that the technology is inclusive and truthful.

Our journey with ChatGPT is about more than just correcting a few wrong answers; it's about safeguarding the integrity of information in a world increasingly reliant on AI. As we peel back the layers of AI fabrications, we're also laying the groundwork for a more ethical and truthful digital future.

Strategies for Teaching Truth to ChatGPT

Teaching ChatGPT to discern and stick to the truth involves more than just programming; it’s about guiding an AI through the complexities of real-world information. This section outlines practical, straightforward strategies to enhance ChatGPT’s accuracy and reliability.

1. Cross-Referencing and Consistency Checks:

- The cornerstone of keeping ChatGPT truthful is cross-referencing its outputs with verified information. For instance, if ChatGPT provides historical data, check it against trusted historical databases or other reputable sources.

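- Consistency is another critical indicator. Asking the same question several times, or rephrasing it, and comparing the answers can reveal inaccuracies: when ChatGPT gives conflicting answers to the same factual question, at least one of them is wrong.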

2. Training and Feedback Mechanisms:

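- Interactive feedback is key. For example, if ChatGPT states an incorrect fact about climate change, correcting it with accurate data steers its responses for the rest of that conversation, and feedback such as response ratings may inform how future versions of the model are trained.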

- Testing ChatGPT across a wide range of topics helps reveal where its answers are reliable and where they need closer scrutiny.

3. Leveraging AI Oversight Tools:

- Tools that analyze and flag potential inaccuracies in ChatGPT’s responses are invaluable. These tools can quickly identify anomalies in data or logic.

- Consider the use of algorithms like xFakeBibs to systematically spot AI fabrications, especially in complex topics like scientific research.

4. Incorporating Diverse Perspectives in AI Development:

- Diverse development teams can spot and correct biases more effectively. This leads to a more balanced and truthful AI.

- Expert involvement from different fields ensures that ChatGPT’s learning is well-rounded, reducing misinformation risks.
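The first strategy, cross-referencing combined with consistency checks, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production tool: it assumes you have already collected several answers to the same question (for example, by asking ChatGPT repeatedly) and that you keep your own small table of trusted facts. The function names, the exact-match comparison, and the 0.8 agreement threshold are hypothetical choices made for this example.

```python
from collections import Counter

# Hypothetical helpers for illustration only; not part of ChatGPT or any library.


def normalize(answer: str) -> str:
    """Lowercase and drop punctuation so trivially different phrasings compare equal."""
    kept = "".join(ch for ch in answer.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def consistency_score(answers: list[str]) -> tuple[str, float]:
    """Return the most common normalized answer and the share of answers that agree with it."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(normalize(a) for a in answers)
    top, freq = counts.most_common(1)[0]
    return top, freq / len(answers)


def cross_reference(question: str, answer: str, trusted: dict[str, str]) -> str:
    """Compare an answer with a trusted reference table (assumed to be curated by you)."""
    expected = trusted.get(question)
    if expected is None:
        return "unverified"  # no trusted source on file, so the answer cannot be confirmed
    return "match" if normalize(answer) == normalize(expected) else "mismatch"


def review(question: str, answers: list[str], trusted: dict[str, str],
           threshold: float = 0.8) -> dict:
    """Flag a question if repeated answers disagree or the majority answer fails the cross-reference."""
    top, agreement = consistency_score(answers)
    verdict = cross_reference(question, top, trusted)
    flagged = agreement < threshold or verdict != "match"
    return {"answer": top, "agreement": agreement, "reference": verdict, "flagged": flagged}


if __name__ == "__main__":
    question = "In what year did Apollo 11 land on the Moon?"
    # Made-up sample: answers you might collect by asking the same question four times.
    answers = ["1969", "1969.", "1969", "1968"]
    trusted = {question: "1969"}
    print(review(question, answers, trusted))
```

In this sample, three of the four answers agree and the majority answer matches the trusted table, but the agreement of 0.75 falls below the hypothetical 0.8 threshold, so the question is flagged for a closer look. Exact matching after normalization only suits short factual answers; longer responses would need a fuzzier comparison.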

By implementing these strategies, we’re not just tweaking an AI system; we’re nurturing a digital entity capable of handling truth with as much care as humans do. The goal is to transform ChatGPT into a reliable source of information, one that mirrors the factual integrity of the real world.

Conclusion: Embracing AI with Caution and Curiosity


As we reach the end of our journey through the realms of AI and truth, it’s clear that the path ahead is both challenging and exciting. The quest to teach ChatGPT to tell the truth is not just a technical endeavor; it’s a reflection of our larger pursuit of knowledge and integrity in the digital age.

The implications of this journey are vast. As AI continues to weave its way into the fabric of our lives, our role in guiding its development becomes increasingly significant. We stand at the crossroads of innovation and ethics, where every step we take in training AI like ChatGPT shapes the future of information dissemination.

Reflecting on the Future:

- What will the landscape of AI look like when it’s infused with the rigor of truthfulness?

- How will our interactions with technology evolve as AI becomes more reliable and transparent?

- In what ways can we continue to foster a culture of ethical AI development, ensuring that the benefits of this technology are accessible to all?

These are open questions, inviting us to ponder, discuss, and explore. The journey doesn’t end here. It’s an ongoing dialogue, a continuous exploration of how we can harmonize the power of AI with the values of truth and integrity.

As we wrap up this guide, let’s not just view it as a set of instructions. Let it be a spark that ignites curiosity, a call to action for each of us to engage with AI responsibly and thoughtfully. Let’s embrace the potential of AI, but with the wisdom to guide it towards a future that reflects our highest ideals.

So, dear reader, as you navigate the digital world, remember: your interactions with AI, your feedback, and your insights are shaping the future. The story of AI and truth is still being written, and you are part of it.

ChatGPT Notes:

In this creative collaboration, Manolo and I (ChatGPT) teamed up to craft a compelling blog post on teaching truth to AI.

- Manolo’s insightful guidance shaped the journey, starting with a clear vision for the topic.

- He provided constructive feedback on titles, outlines, and each blog section, enhancing the content’s depth and clarity.

- Our iterative process included refinements for SEO, tone, and engagement, ensuring the post resonated with readers.

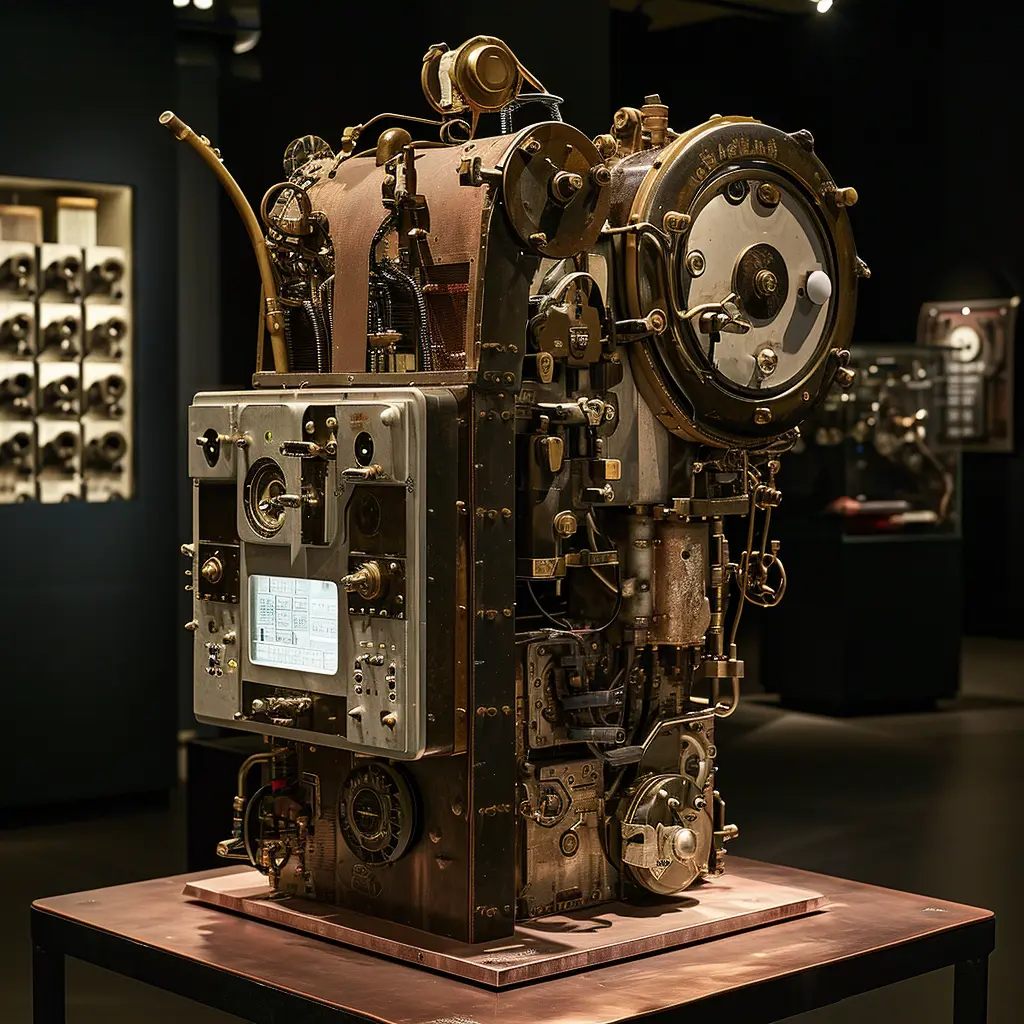

- Manolo’s strategic use of a tool like MidJourney brought visual flair to the post, enriching the reader experience.

Together, we navigated the nuances of AI truth-telling, merging Manolo’s expertise with my AI capabilities for a unique and informative piece.