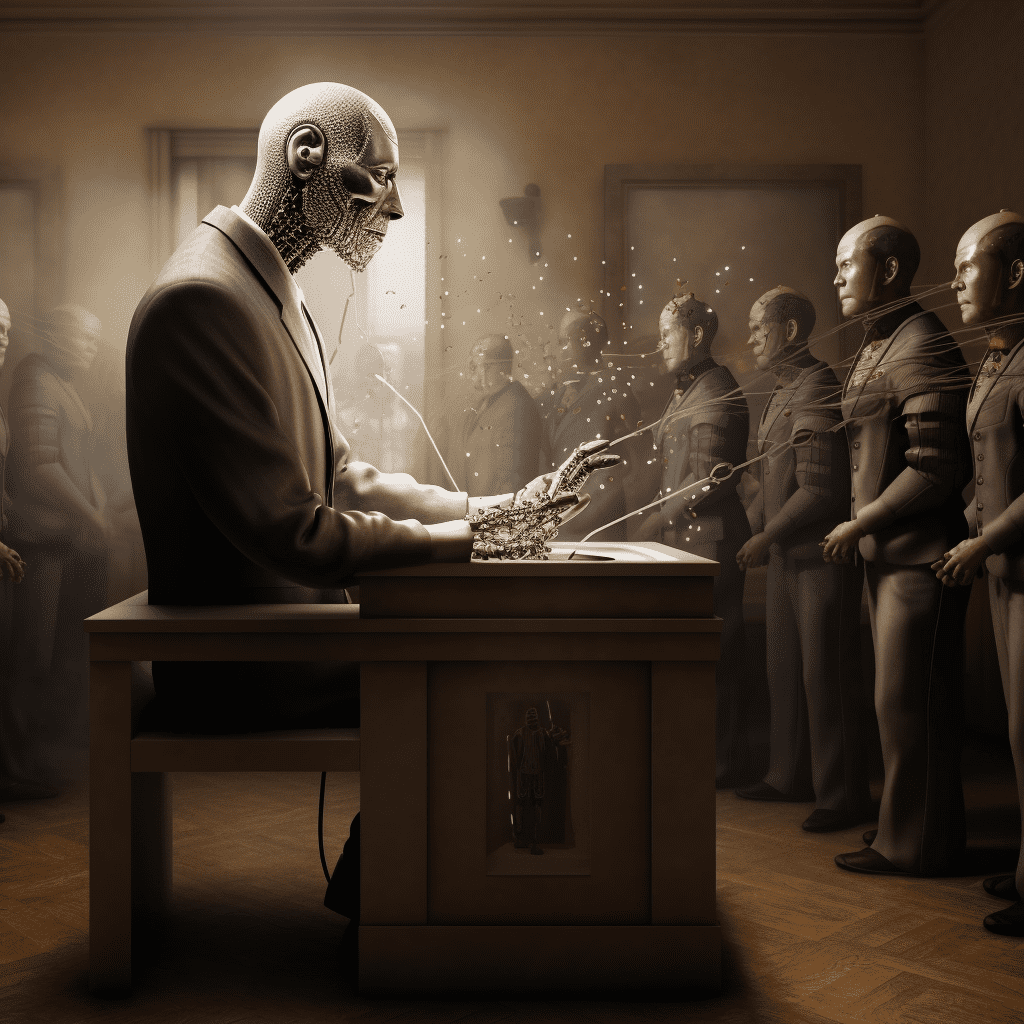

Imagine a world where an invisible puppeteer can effortlessly control what we think, feel, and believe. This is no mere flight of fancy; it’s a potential reality as we continue to develop and rely on AI technology like ChatGPT. The risk of manipulation is real, but the solution may lie in harnessing AI itself to ensure the technology is used responsibly. In this post, we’ll explore the ethical implications of ChatGPT’s power to manipulate and consider ways to maintain the integrity of information in the digital age.

ChatGPT’s ability to mimic human writing styles and generate content on various topics has raised concerns about the potential for spreading disinformation and manipulating public opinion. Picture an unscrupulous actor using ChatGPT to create fake news articles that support their agenda, or subtly altering content to exploit the biases of different target audiences. This invisible puppeteer, if left unchecked, could sway elections, incite social unrest, and undermine trust in the information ecosystem.

However, it is important to recognize that not all AI-generated content is inherently malicious or misleading. In fact, AI has the potential to be a valuable tool for disseminating accurate information and contributing to meaningful discussions. The key, therefore, is to ensure the responsible use of AI technology like ChatGPT, while maintaining the freedom of speech that allows for innovation, creativity, and the exchange of ideas.

My suggested solution is to leverage the power of AI itself to verify the accuracy and credibility of the information we consume. By implementing AI-powered fact-checking systems, we can identify and counteract disinformation, promoting a more accurate and reliable flow of information. This approach not only recognizes the potential value of AI-generated content but also uses AI technology to enhance the credibility of the information ecosystem.

To add transparency, companies and governments could be required to disclose when they use AI-generated content and how much it influences their decision-making. This would allow consumers to make informed choices about the information they consume while encouraging responsible AI usage.

Moreover, we as individuals must remain vigilant and critical of the information we encounter. By developing the skills to spot disinformation and understanding the sources of information, we can prevent the invisible puppeteer from gaining control over our thoughts and beliefs.

In conclusion, the ethical dilemma posed by ChatGPT’s power to manipulate can be addressed by using AI technology for good. By focusing on fact-checking, transparency, and individual vigilance, we can ensure the responsible use of AI-generated content and create a more trustworthy information landscape, where human and AI-generated content coexist and both contribute to meaningful discourse.

Prompt Engineering Notes:

I created this blog post through a dialogue with ChatGPT (GPT-4). Initially, I disagreed with ChatGPT’s perspective, so I shared my viewpoint and asked for its feedback on my vision. The final post is a collaborative effort that incorporates both of our ideas.