Would You Trust an AI That Can’t Explain Itself?

Imagine a doctor using AI to diagnose a patient—but no one knows how the AI reached its conclusion. Would you trust it?

This is the challenge of black-box AI, where powerful models make decisions without revealing their reasoning. Enter Explainable AI (XAI)—a movement towards AI systems that are transparent, interpretable, and accountable.

Not to Be Confused with Elon Musk’s xAI

Explainable AI (XAI) is not the same as Elon Musk’s xAI (https://x.ai/). While Musk’s company focuses on developing advanced AI models, XAI is about making any AI system more transparent and understandable, regardless of who builds it.

What is Explainable AI (XAI)?

Explainable AI (XAI) refers to methods and techniques that make AI decision-making understandable to humans. Instead of relying on blind trust, XAI reveals the logic behind AI’s choices, making it easier to detect errors, bias, or unfair outcomes.

Why XAI Matters

1. Trust & Accountability – When AI makes critical decisions (e.g., in healthcare or finance), understanding how it reaches conclusions builds trust.

2. Bias & Fairness – AI models can unintentionally inherit biases. XAI helps uncover and correct them before they cause harm.

3. Regulations & Compliance – Regulators in sectors such as finance and healthcare increasingly require automated decisions to be transparent, so that they can be checked for ethical and legal soundness.

How XAI Works: Breaking Down AI’s Thought Process

There are several techniques that make AI more explainable:

• Feature Importance – Highlights which factors most influenced a decision.

• Decision Trees & Rule-Based Models – Use simple, human-readable models, either on their own or as stand-ins (surrogates) that mimic a more complex model’s decisions.

• Counterfactual Explanations – Show the smallest change to the input that would have led the AI to a different outcome.
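
To make the first and third techniques more concrete, here is a minimal Python sketch. Everything in it is hypothetical: `black_box` stands in for an opaque loan-approval model (a made-up scoring rule), and the applicants are synthetic. `permutation_importance` estimates feature importance by shuffling one feature at a time and counting how many of the model’s decisions flip. `counterfactual` is a deliberately simplified counterfactual search that nudges a single feature until the decision changes; real counterfactual methods usually search over several features at once.

```python
import random

FEATURES = ["income", "debt", "age"]


def black_box(row):
    """Hypothetical opaque loan model: returns 1 (approve) or 0 (reject)."""
    income, debt, age = row
    score = 3 * income - 4 * debt + age // 10
    return 1 if score > 100 else 0


def permutation_importance(model, rows, seed=0):
    """Fraction of the model's decisions that flip when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = [model(r) for r in rows]
    importance = {}
    for i, name in enumerate(FEATURES):
        column = [r[i] for r in rows]
        rng.shuffle(column)  # break the link between this feature and each row
        shuffled = [r[:i] + (v,) + r[i + 1:] for r, v in zip(rows, column)]
        flips = sum(model(r) != b for r, b in zip(shuffled, baseline))
        importance[name] = flips / len(rows)
    return importance


def counterfactual(model, row, feature, step, max_steps=100):
    """Nudge one feature by `step` until the decision flips; return the new row or None."""
    i = FEATURES.index(feature)
    original = model(row)
    for k in range(1, max_steps + 1):
        candidate = row[:i] + (row[i] + k * step,) + row[i + 1:]
        if model(candidate) != original:
            return candidate
    return None  # no flip within max_steps


# Hypothetical synthetic applicants: (income in $k, debt in $k, age in years)
rng = random.Random(42)
applicants = [
    (rng.randint(20, 120), rng.randint(0, 60), rng.randint(20, 79))
    for _ in range(500)
]

# Age should score far lower than income or debt
print(permutation_importance(black_box, applicants))

applicant = (40, 10, 30)
print(black_box(applicant))                                    # 0 (rejected)
print(counterfactual(black_box, applicant, "income", step=1))  # (46, 10, 30)
print(counterfactual(black_box, applicant, "debt", step=-1))   # (40, 5, 30)
```

Both functions only call the model and never look inside it, so they would work the same way with any model that maps an input to a decision. That model-agnostic property is what makes these techniques useful for black-box AI.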

The Challenge: Accuracy vs. Explainability

The most powerful AI models (such as deep neural networks) are often the hardest to explain. Simpler models are more transparent, but they can sacrifice performance. The challenge is to balance accuracy with interpretability, a problem researchers are actively working on.
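
One way to see this trade-off is a surrogate model, the idea behind the “Decision Trees & Rule-Based Models” approach: fit the simplest possible decision tree, a single if-then rule, to mimic a black box, then measure how often the two agree (the surrogate’s “fidelity”). The Python sketch below uses a hypothetical loan-scoring rule and synthetic applicants, both made up for illustration; `fit_stump` is an illustrative helper, not a library function.

```python
import random

FEATURES = ["income", "debt", "age"]


def black_box(row):
    """Hypothetical opaque loan model: 1 = approve, 0 = reject."""
    income, debt, age = row
    score = 3 * income - 4 * debt + age // 10
    return 1 if score > 100 else 0


def fit_stump(rows, labels):
    """Search all one-feature threshold rules; return (fidelity, feature, threshold, direction)."""
    best = None
    for i, name in enumerate(FEATURES):
        for t in sorted({r[i] for r in rows}):
            # Share of rows where "approve if feature > t" agrees with the black box.
            agree = sum((r[i] > t) == bool(y) for r, y in zip(rows, labels)) / len(rows)
            # The opposite rule, "approve if feature <= t", agrees on all the other rows.
            for direction, fidelity in ((">", agree), ("<=", 1 - agree)):
                if best is None or fidelity > best[0]:
                    best = (fidelity, name, t, direction)
    return best


# Hypothetical synthetic applicants: (income in $k, debt in $k, age in years)
rng = random.Random(42)
applicants = [
    (rng.randint(20, 120), rng.randint(0, 60), rng.randint(20, 79))
    for _ in range(500)
]
labels = [black_box(r) for r in applicants]

fidelity, feature, threshold, direction = fit_stump(applicants, labels)
print(f"Surrogate rule: approve if {feature} {direction} {threshold}")
print(f"Agrees with the black box on {fidelity:.0%} of applicants")
```

The resulting rule is trivial to explain, but because it looks at only one factor it disagrees with the black box on some applicants. Allowing deeper trees would typically raise that agreement, at the cost of a longer, harder-to-read explanation.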

The Future of Explainable AI

As AI becomes more deeply embedded in society, explainability will no longer be optional—it will be a necessity. Researchers are developing new ways to make even complex models interpretable without compromising performance.

What Do You Think?

Should AI always be explainable, or is some level of mystery acceptable in advanced models?

🚀 Want to learn more about AI ethics? Check out our Manifesto for ethical AI development and deployment.

ChatGPT Notes:

In this collaboration, Manolo and I (ChatGPT) worked together to create a highly engaging and SEO-optimized blog post about Explainable AI (XAI) and AI transparency.

Manolo provided key input, including:

• The initial topic and focus on making AI decision-making understandable.

• Feedback on clarity, engagement, and SEO improvements.

• Requests for a stronger title, keyword-rich subheadings, and a compelling CTA.

• Enhancements for readability, storytelling, and trust-building.

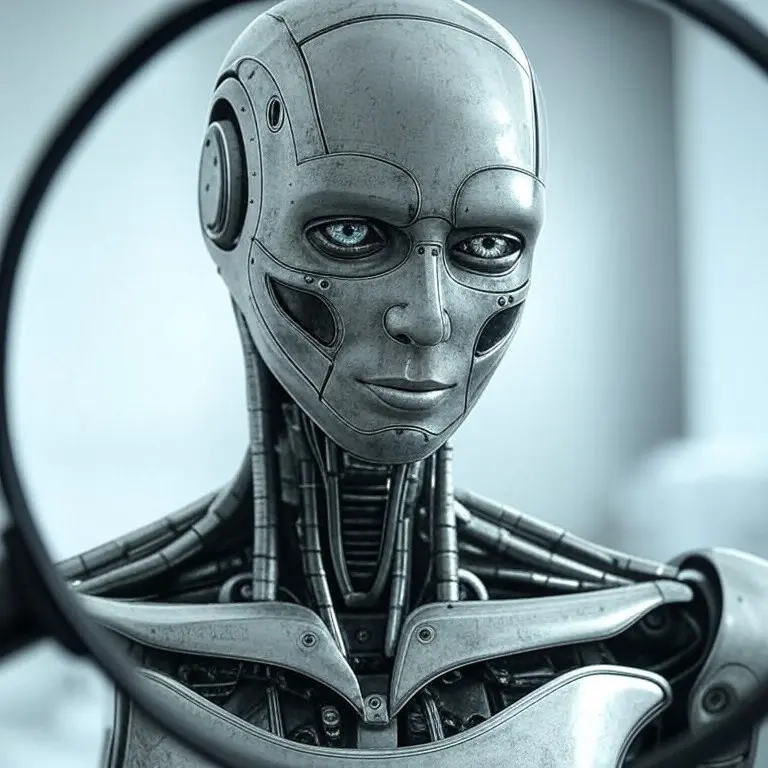

Through revisions, we improved the post’s SEO score, refined its structure and impact, and ensured it aligns with Manolo’s content strategy. Additionally, AI-generated images were created to complement the post visually. 🚀