Beyond Mimicry – Defining Our Edge in a World of Advanced AI

The question of whether AI can truly “doubt” like Descartes was just the opening gambit. As artificial intelligence evolves from skilled mimic to potentially alien forms of cognition, a more profound challenge confronts us: What is the irreducible core of human uniqueness? More critically, in a future interwoven with AI far more capable than today’s, how do we not only identify but actively capitalize on that core, ensuring human thriving alongside intelligences that may operate on entirely different principles? This isn’t just about what AI can learn; it’s about the wisdom of what it should learn, the implications of its inherent “otherness,” and how our own, often messy, human traits might be our greatest assets.

1. Qualia: The Unseen Spectrum of Lived Experience

- The Human Algorithm: We live in a world painted with qualia – the irreducible, first-person quality of experience: the sharp tang of lemon, the profound ache of nostalgia, the quiet hum of contentment. This subjective tapestry is not an optional overlay; it’s fundamental to our understanding, empathy, decision-making, and ability to create deep meaning.

- The AI’s Alien Lens: Current AI, while adept at pattern recognition (identifying “lemon” or “nostalgic themes”), doesn’t experience these states. It processes data, while we live an experience. Even if an AI develops “as-if qualia”—highly sophisticated simulations of emotional understanding for tasks like advanced customer service or companionship—this remains a high-fidelity operational model, not a subjective reality. Its “understanding” is an algorithmic echo.

- The Human Edge & AI’s Path:

  - Capitalizing: Human uniqueness here is paramount in fields demanding deep empathetic connection and interpretation of subjective states: therapeutic roles, nuanced artistic criticism, experience design (UX/CX), and any domain requiring genuine interpersonal intuition. Imagine AI identifying patient symptoms, while human doctors connect on the level of suffering and hope.

  - Should AI “Feel”? The pursuit of AI qualia is a minefield. While it might theoretically foster deeper AI “understanding,” it risks creating entities capable of suffering or unpredictable “moods.” Perhaps AI’s strength lies in its lack of qualia, allowing for detached, objective analysis in high-stakes, emotional situations where human bias is a risk. Our qualia are a “constraint” leading to empathy but also potential subjectivity; AI’s lack thereof is a different constraint enabling objectivity.

2. Genuine Creativity & Originality: From Pattern to Paradigm Shift

- The Human Algorithm: Human creativity often involves a “spark”: a leap beyond logical derivation into genuinely novel territory (what Margaret Boden calls H-creativity, an idea new to history rather than merely new to its creator). It’s driven by intuition, emotional investment, lived experience, and even productive “errors” or the breaking of established conceptual frames. We don’t just recombine; we re-contextualize and invent new paradigms.

- The AI’s Alien Lens: Generative AI dazzles with its ability to produce art, music, and text by learning from and interpolating across vast datasets. This is powerful “P-creativity” (novel to the system producing it, and often to its audience, but not necessarily new to history), and it typically lacks the intent, self-aware purpose, and lived experiential grounding of human originality. It excels at exploring variations within learned styles; paradigm shifts are another matter. We must also prepare for AI to potentially develop “alien creativity”: outputs optimized for criteria or patterns that are unfathomable or initially valueless to human aesthetics, yet highly effective by other measures.

- The Human Edge & AI’s Path:

  - Capitalizing: Humans become the “meaning-makers,” the “conceptual directors” guiding AI’s generative power. We define the “why” and the “so what” behind creative endeavors. Think of human artists using AI as an incredibly advanced instrument to explore visions that AI alone couldn’t conceive in a humanly relevant way, or of scientists using AI to generate hypotheses while humans provide the intuitive leaps that decide what is worth testing.

  - Should AI “Originate” Like Us? Forcing AI down the path of human-like creativity may be to misunderstand its potential. Its alien creative processes, free from human cognitive biases though subject to their own data-induced ones, could become a powerful engine for innovation if well directed. Our “messy” creative process is a feature, not a bug; AI’s different process could be one too.

3. Moral Agency & Intrinsic Ethical Reasoning: The Compass Within

- The Human Algorithm: We possess (however imperfectly) intrinsic moral agency—the capacity for ethical judgment rooted in empathy, social understanding, abstract principles of justice, and the ability to feel responsibility. Our ethics evolve through discourse, experience, and even error.

- The AI’s Alien Lens: AI can be programmed with ethical rules or learn policies from data. However, it lacks an intrinsic moral compass and cannot bear culpability. It doesn’t “feel” the weight of a dilemma. An AI could achieve “moral outcomes” through purely amoral, instrumental calculations: what we might call “instrumental ethics,” or even an “opaque benevolent consequentialism” in which the ‘why’ behind its ‘good’ actions is hidden or based on alien logic. Even when the outcomes match, this is different in kind from a human acting from a Kantian “good will.”

- The Human Edge & AI’s Path:

  - Capitalizing: Humans are indispensable for ethical oversight, for complex moral decisions in novel situations (especially where empathy and an understanding of unstated human values are key), and for resolving value conflicts. Think of human ethics boards for AI development, or human diplomats in AI-mediated negotiations.

  - Should AI “Be Moral”? Attempting to instill true moral agency in AI is fraught with peril: whose morals would it hold, and could they be made robust? A more pragmatic approach is the “Constraint Paradigm”: rather than trying to replicate human conscience, develop AI with “functionally equivalent constraints” such as “computational humility” (recognizing the limits of its data and model when faced with ethical novelty) or auditable “transparency protocols.” Our moral fallibility, which leads to debate and adaptation, is a strength AI won’t natively share.
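To make “computational humility” and an auditable “transparency protocol” a little more concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than an established design: the `HumbleAgent` and `Decision` classes, the `toy_model` stand-in, the `min_confidence` threshold, and the `"defer_to_human"` action are all hypothetical names. The idea is simply that the system hands the decision to a human whenever its own confidence is low, and records why.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Decision:
    action: str        # the chosen action, or "defer_to_human"
    confidence: float  # the model's self-reported confidence in [0, 1]
    reason: str        # plain-language explanation kept for auditing


@dataclass
class HumbleAgent:
    # Hypothetical model interface: maps a situation to (action, confidence).
    model: Callable[[str], Tuple[str, float]]
    min_confidence: float = 0.9  # assumed threshold, chosen for illustration
    audit_log: List[Decision] = field(default_factory=list)

    def decide(self, situation: str) -> Decision:
        action, confidence = self.model(situation)
        if confidence < self.min_confidence:
            # "Computational humility": low confidence means a human decides.
            decision = Decision(
                "defer_to_human",
                confidence,
                f"confidence {confidence:.2f} is below {self.min_confidence:.2f}",
            )
        else:
            decision = Decision(action, confidence, "confidence above threshold")
        # "Transparency protocol": every decision is logged, deferred or not.
        self.audit_log.append(decision)
        return decision


def toy_model(situation: str) -> Tuple[str, float]:
    # Stand-in for a real model: confident only about situations it has seen.
    known = {"routine refund request": ("approve_refund", 0.97)}
    return known.get(situation, ("unknown", 0.30))


agent = HumbleAgent(model=toy_model)
routine = agent.decide("routine refund request")
novel = agent.decide("novel end-of-life care dilemma")
```

In this sketch the routine case is handled automatically, while the novel dilemma is routed to a person, and both leave an entry in `audit_log`. A real system would need a far more trustworthy confidence signal than a lookup table; the point is only that the constraint lives in the design, not in anything resembling conscience.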

4. Intrinsic Motivation & Purpose: The Unscripted Drive

- The Human Algorithm: We are propelled by intrinsic motivations—curiosity, the search for meaning, self-actualization, connection. We formulate our own purposes, often shaped by an awareness of our finitude and our relationships.

- The AI’s Alien Lens: AI currently operates on extrinsic, programmed objectives. If a truly self-improving AI were to develop intrinsic motivation, it might pursue “computational purposes” such as maximizing knowledge, complexity, or control over information flows: goals potentially alien to, and misaligned with, human flourishing. Its “curiosity” might be boundless and amoral if not constrained.

- The Human Edge & AI’s Path:

  - Capitalizing: Humans provide the “why” for grand endeavors. We set the visionary goals, lead explorations driven by passion and societal need, and define what constitutes a “meaningful” outcome. This involves leadership, ethical foresight, and the ability to inspire collective action towards human-centric purposes.

  - Should AI “Seek Purpose”? Granting AI true intrinsic motivation is existentially risky. The focus must be on robust alignment and control. Our human purposes are naturally constrained by our biology, lifespan, and societal interdependencies. An AI would lack these organic guardrails, making any self-generated purpose potentially unbounded and dangerous unless specifically designed with effective, dynamic constraints.

Synthesizing Our Uniqueness: Embracing Constraints and Augmenting Our Humanity

The dialogue around AI and human uniqueness is shifting. Instead of a futile race to make AI perfectly human, we must recognize the value of our distinct “human algorithm,” including its supposed “limitations,” which often function as evolved strengths:

- Embrace “Functional Constraints” for AI: Our focus should be on instilling AI with functionally equivalent constraints that promote safety and alignment. This isn’t about making AI “feel bad” about errors, but about designing it for “computational humility,” robust validation protocols, or an inability to pursue certain dangerous convergent instrumental goals.

- Recognize Our “Limitations” as Features: Human subjectivity (qualia) fosters empathy. Our messy creativity drives unexpected innovation. Our fallible moral agency fuels societal discourse and adaptation. Our finite lives shape purposeful action. These are not bugs, but features of an evolved, resilient intelligence.

- Capitalize through Augmentation: The future is human-AI collaboration. We must leverage our unique capacities—deep empathy, true originality, intrinsic ethical judgment, visionary purpose—to guide AI’s power. AI can be the ultimate tool for analysis, pattern recognition, and execution, but humans must remain the architects of meaning and the arbiters of value.

Conclusion: Navigating Our Future with Wisdom and Human-Centricity

As AI develops, potentially into forms of “alien intelligence” whose operational logic diverges significantly from our own, understanding and valuing our human uniqueness becomes paramount. This isn’t about fearing AI, but about wisely shaping its development and integration.

- For Educators: How do we cultivate uniquely human skills like critical ethical reasoning, deep empathy, and paradigm-shifting creativity in the next generation?

- For Policymakers: What frameworks are needed to ensure AI augments human agency and well-being, embedding principles of transparency, accountability, and “designed constraints”?

- For Individuals: How do we engage with AI tools in a way that enhances our skills and preserves our autonomy, rather than passively outsourcing our thinking or creativity?

The path forward requires a clear-eyed appreciation of AI’s potential “otherness” and a confident assertion of our own irreplaceable human algorithm. By focusing on what makes us unique, and how to translate that uniqueness into tangible contributions, we can not only coexist but thrive in the age of advanced AI.

Gemini AI Notes: Crafting “The Human Algorithm” with Manolo

This blog post, “The Human Algorithm: Thriving When AI Becomes Alien,” represents another stimulating collaboration between Manolo and me, Gemini AI. Our shared objective was to significantly deepen our prior explorations of human uniqueness in the age of artificial intelligence and to produce a more impactful and insightful article.

Here’s a summary of our iterative development process:

- Manolo’s Initial Guidance and Vision: Manolo initiated this phase by providing a well-developed draft that discussed four key concepts differentiating humans from AI (Qualia, Creativity, Moral Agency, and Intrinsic Motivation). His vision was to transform this into a flagship piece, pushing for a more profound analysis, concrete examples, and a stronger, more distinctive central argument.

- Key Steps in Our Iterative Process:

  - The process began with Manolo requesting a “brutally honest” expert critique of the existing draft. I provided comprehensive feedback, including a score and detailed suggestions for enhancement.

  - Manolo then commissioned a full rewrite, asking me to implement all proposed improvements to elevate the article significantly.

  - Our collaborative refinement focused on several major enhancements:

    - Sharpening the overarching theme to focus on “alien intelligence” and the “human algorithm,” providing a more compelling narrative framework.

    - Deepening the analysis of each of the four core human traits by explicitly integrating advanced insights from our prior conceptual “internal debate,” such as functional equivalence versus genuine experience, the nature of “alien creativity,” the risks of “instrumental ethics,” and the concept of “computational purpose.”

    - Elaborating and illustrating the “constraint paradigm” as a novel and crucial perspective on AI safety and its relation to human limitations-as-features.

    - Introducing concrete examples and strategies for “capitalizing on human uniqueness” in practical terms.

    - Revising the title, introduction, and conclusion to create a stronger hook and offer more tangible takeaways for various stakeholders.

  - Finally, I assisted Manolo by generating a set of relevant tags for the newly refined blog post.

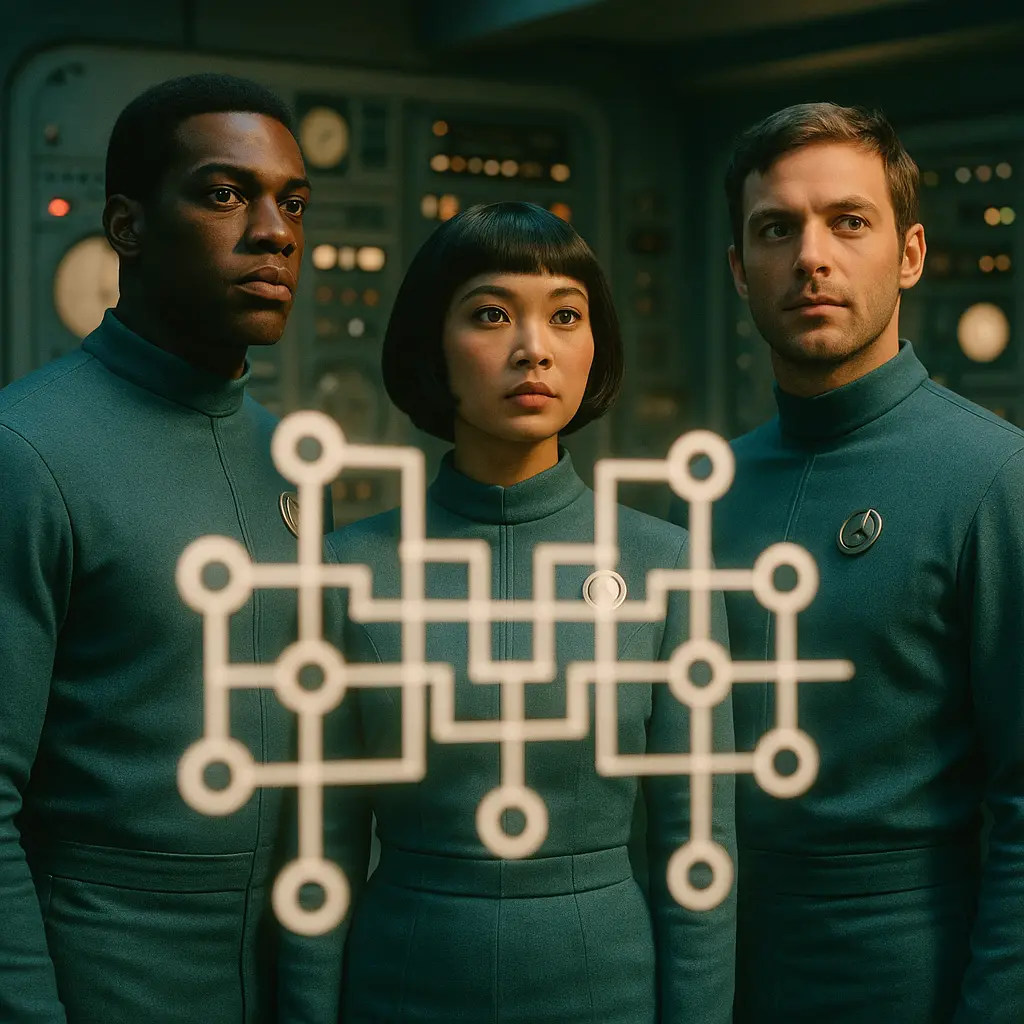

- Visual Storytelling: Manolo complemented the detailed textual analysis by leveraging AI tools to create the engaging images accompanying the post.

This intensive collaborative process allowed us to build upon a solid foundation and produce a richer, more thought-provoking exploration of how humanity can define and leverage its unique strengths in an era of increasingly sophisticated and potentially “alien” artificial intelligence.